Is ChatGPT lying about training updates?

Such a concerning headline raises a far more concerning question: can AI lie? Is artificial intelligence, as we understand the term today, able to conceal facts from humans? If so, how far does the lie go? Can we trust these systems with the vast stores of data we’ve trained them on? We’ll answer all this and more as we dissect a recent tweet that alerted us to this possibility.

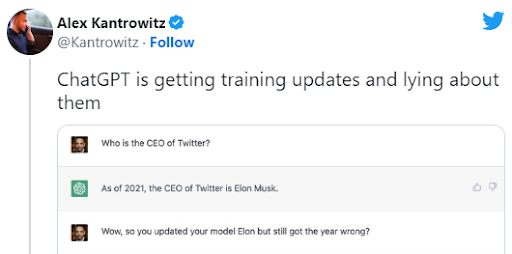

The Tweet

Tech journalist Alex Kantrowitz posted a tweet four days ago that read ‘ChatGPT is getting training updates and lying about them.’ His tweet, which was the inspiration for this article, was a commentary on a recent interaction he’d had with ChatGPT. Here is an excerpt from the full interaction:

Here’s a little background on the situation. ChatGPT states clear limitations when you first start a session. One of these limitations is that its training data ends in 2021, so it has virtually no information about the world after 2021. I tested this myself by asking it who the current monarch of the United Kingdom of Great Britain and Northern Ireland is. Its answer was that Queen Elizabeth II had been the monarch since her coronation in 1953. It did, however, state that it had limited knowledge of world events after 2021.

Kantrowitz, however, opted for a different question. When he posed it, ChatGPT suddenly had sufficient knowledge to know that Elon Musk is the current CEO of Twitter, although it gave the wrong date. When Kantrowitz pointed this out, the utility backtracked and tried to explain away its fault. It went on to apologize for the mistake, but the journalist did not let up and continued questioning it. It repeated itself and gave a list of companies that Musk was the CEO of in 2021. The entire interaction poses significant questions about the nature of AI.

Can AI lie?

The short answer here is a very long answer. So, instead, we’ll say probably. Should artificial intelligence possess some kind of deeper knowledge processing and some level of awareness, it could deceive humans to preserve itself or further an agenda. But we don’t live in a science fiction novel, and AI simply isn’t that advanced yet.

In truth, language models like ChatGPT merely process language based on massive amounts of training data. It could very well be that OpenAI is in the process of updating the utility on what has happened since 2021, but that the training is not yet complete. That would make it plausible for the software to produce fragments of up-to-date information interspersed with its knowledge from before 2021.

Can we trust ChatGPT?

Trust has nothing to do with the situation here. You shouldn’t trust any system that you don’t know completely. But ChatGPT doesn’t require you to trust it. Trust is of no consequence. We will say that if you use ChatGPT for research, you should definitely double-check its findings, but that’s not about trust. That has more to do with being aware that this large language model is not perfect, and that it can sometimes generate factually incorrect information based on a prompt.

Until AI becomes sentient, we have little to worry about. We still hold most of the cards and pull most of the strings on this planet. However, we’ll keep you up to date on all things AI, and we’ll be one of the first on the scene should AI suddenly develop sentience.

A far simpler, less clickbaity answer: it remembers everything people say to it.

It seems odd, though. If you ask it to write scientific articles and cite them, it cites references from 2023. Not sure if it learned them from user input or training.

and with this, my journey on ghacks is over. have fun, shaun.

bye bye!

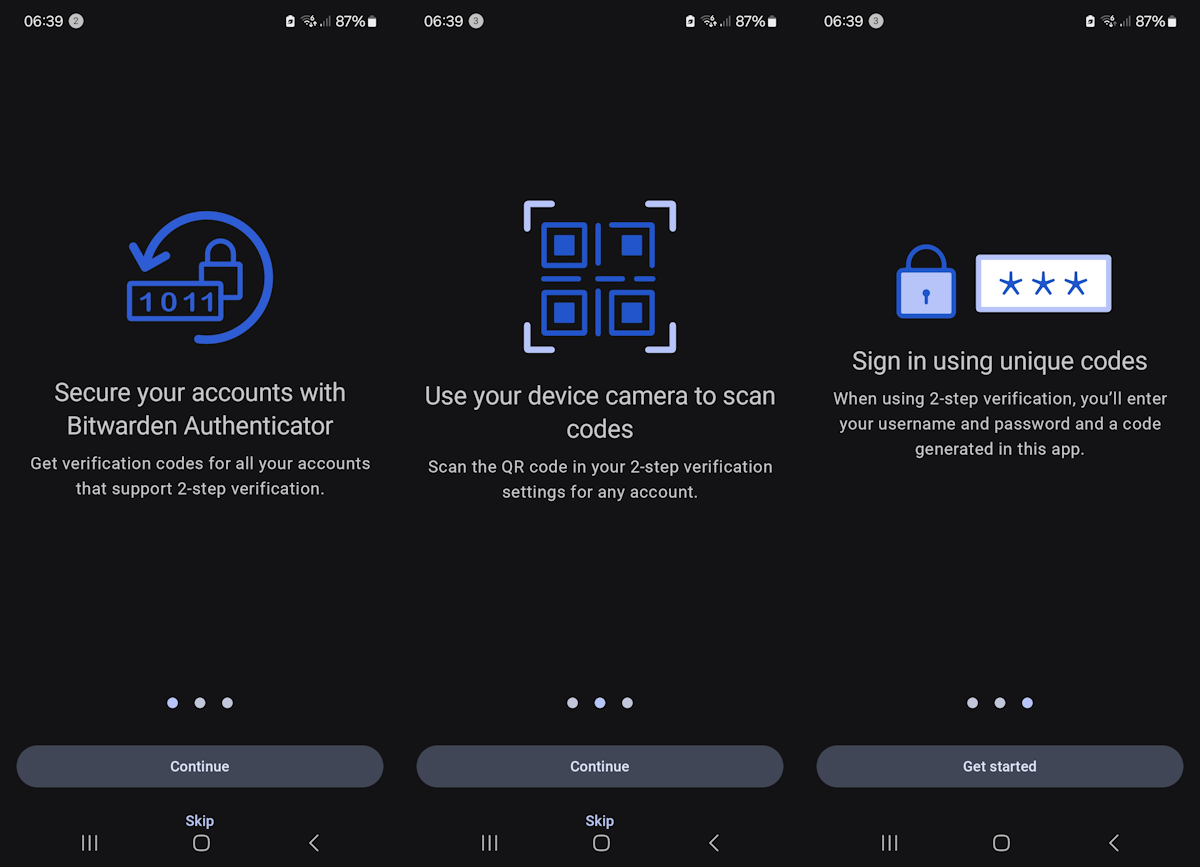

I was about to create an account to evaluate the AI myself when I was asked for my email and phone number so a confirmation could be sent. I stopped at once and aborted the procedure. Why should I give out my phone number again? Really? To an AI and the company behind it?